OpenAI's New Neural Network Demonstrates a Frightening Ability to Perfectly Forge Official Documents

OpenAI's latest release, the image generator built into GPT-4o, has raised serious concerns because it can forge documents such as bills and receipts that are practically indistinguishable from the real thing. A worrying example came from Deedy Das of Menlo Ventures, who posted a fake steakhouse receipt generated by the model on the social network X. What made the imitation so striking was that the model faithfully reproduced the layout and details of a real receipt, replacing only the actual information with deliberately false data.

The forgery was then retouched in a graphics editor, which made it even more realistic by adding details such as sauce stains, scuffs, and creases. The result was so convincing that, without a direct comparison to an original, recognizing it as a fake appears virtually impossible.

However, the greater concern is not so much the quality of the forgery as the apparent absence of built-in content controls in GPT-4o. One possible explanation is that these restrictions were temporarily disabled while the new version was being tested.

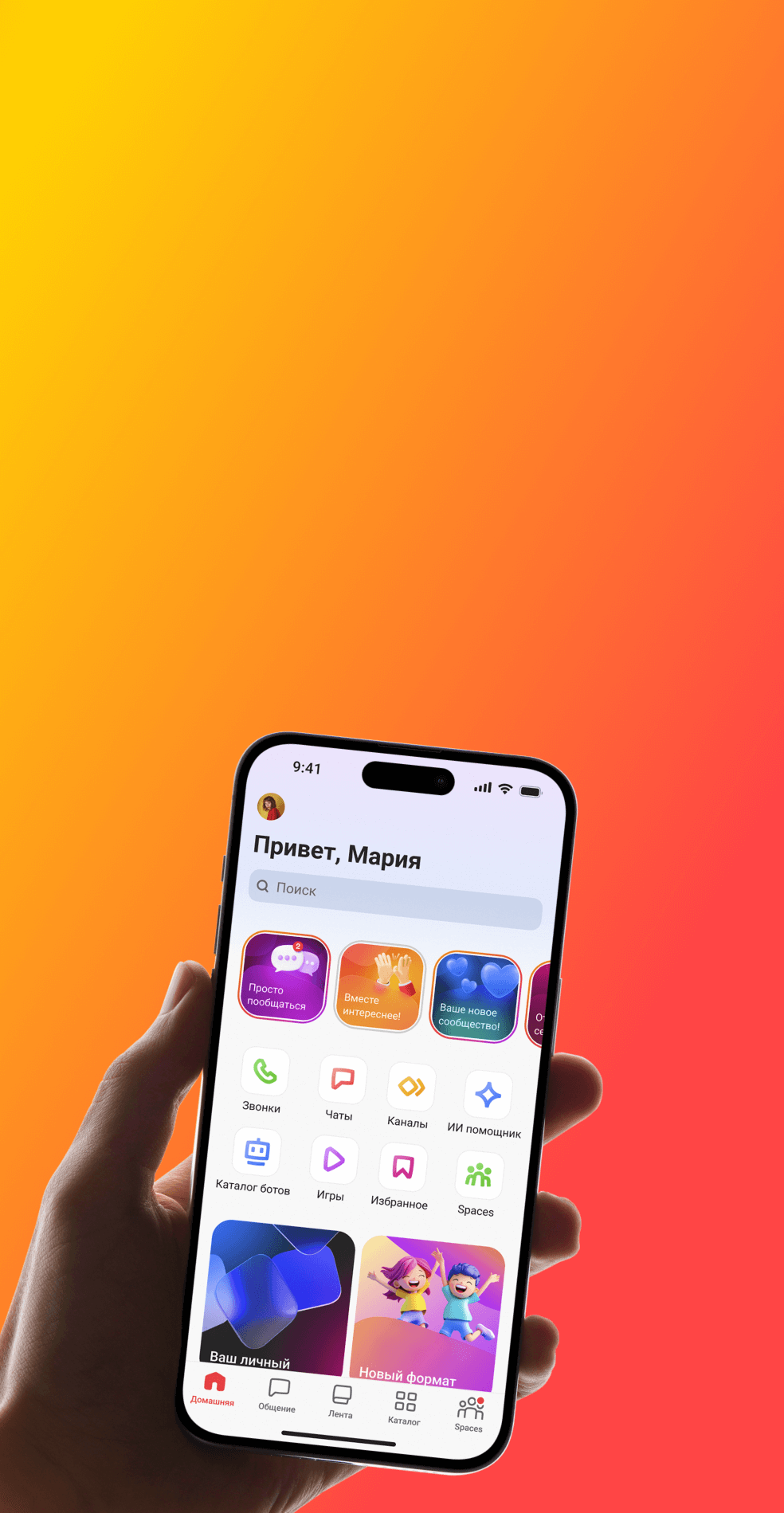

Ultimately, responsibility for deepfakes rests with the platform developers who disable moderation and fail to block harmful content. Gem Space, like a number of other services, also offers image generation, but all images there are generated in safe mode: illegal or 21+ content cannot be created.

According to Das, he easily got the model to produce an image of a prescription for an antidepressant. Although it is doubtful that a pharmacy would dispense the drug on the strength of a picture alone, the mere fact that the model can generate potentially dangerous fakes is alarming and raises serious questions about the safety and ethics of such technologies.